The 12 Best LLM Optimization Tools for AI Visibility in 2026

Updated December 20, 2025

As we head into 2026, the digital landscape is undergoing its most significant transformation since the dawn of the search engine. Traditional SEO is no longer enough. The new frontier is AI visibility, which simply means ensuring your brand is accurately and authoritatively cited, referenced, and recommended by AI answer engines like Google’s AI Overviews, Perplexity, and ChatGPT. When a user asks a question, is your brand the source of the answer? That is the new measure of success. According to Gartner, by 2026, search engine volume is projected to drop by 25% as users increasingly turn to AI chatbots for information, making AI visibility a critical battleground for market share.

Achieving this requires a strategic approach far beyond standard SEO practices. It involves meticulously optimizing your content for machine comprehension, tracking brand mentions within generative AI responses, and continuously refining the data that AI models use to form their answers. This practice, often called generative SEO, depends on a deep understanding of What is Semantic SEO to ensure your content aligns with user intent and complex AI algorithms. Without the right tools, this process is like navigating a maze blindfolded. You are left guessing whether your content resonates with the models that now act as the primary gatekeepers to information.

This comprehensive guide cuts through the noise. We have compiled a detailed list of the best LLM optimization tools for AI visibility, designed to give your team the control and insight needed to thrive. For each platform, you will find a clear breakdown of its core features, ideal use cases, practical integration notes, and pricing information. Complete with screenshots and direct links, this resource is your roadmap to finding the perfect tools to monitor, measure, and enhance your presence in the age of AI powered search.

1. Riff Analytics

Riff Analytics emerges as a powerful, dedicated platform for brands serious about winning in the era of generative AI. Positioned as one of the best LLM optimization tools for AI visibility, it moves beyond theoretical advice, offering a comprehensive suite of features to track, analyze, and improve your brand’s presence across the most influential AI engines. Its core strength lies in translating complex AI response data into an actionable roadmap for SEO, content, and brand teams.

The platform provides a unified dashboard to monitor your brand’s citations and mentions across a wide array of systems, including ChatGPT, Perplexity, Claude, Gemini, and Google AI Overviews. This multi engine coverage is critical, as user preferences for AI assistants are still evolving. Riff doesn't just tell you if you were mentioned; it shows you precisely which content assets are being cited as sources, revealing the exact pages that AI models trust.

Exploring AI Visibility Tracking with Riff Analytics

Riff Analytics excels at citation source mapping and competitive gap analysis. You can quickly see which URLs AI engines are referencing for key topics and, more importantly, identify where your competitors are being cited instead of you. This functionality turns AI optimization from a guessing game into a data driven strategy. For instance, an SEO manager can use the platform’s AI readiness audit to instantly pinpoint high priority pages that need content updates to better align with what AI models are looking for. To dig deeper into this approach, Riff provides excellent resources to help teams understand the nuances of this new landscape. Learn more about optimizing for AI search engines on riffanalytics.ai.

Practical Application for Generative SEO

- Ideal Use Case: A B2B SaaS company wants to ensure its product is the top recommendation for industry specific queries in both Google's AI Overviews and ChatGPT. Using Riff, the marketing team can track these queries, analyze the sources cited for competitor mentions, and adjust their own content strategy to build authority on those specific topics.

- Integration: Riff requires no technical setup and delivers insights within minutes. It fits directly into an existing AI SEO workflow by providing the "why" behind your performance. After identifying citation gaps in Riff, teams can use their traditional SEO and content tools to execute the necessary on page optimizations.

- Access: The platform offers a 7 day free trial and a complimentary AI visibility report to demonstrate its value quickly. While specific pricing is not public, plans are available that grant full access to its core optimization features, requiring users to sign up or contact their team for details.

- Limitations: The lack of public pricing and customer case studies on its website may be a hurdle for teams needing immediate budget validation or social proof before engaging with the sales process.

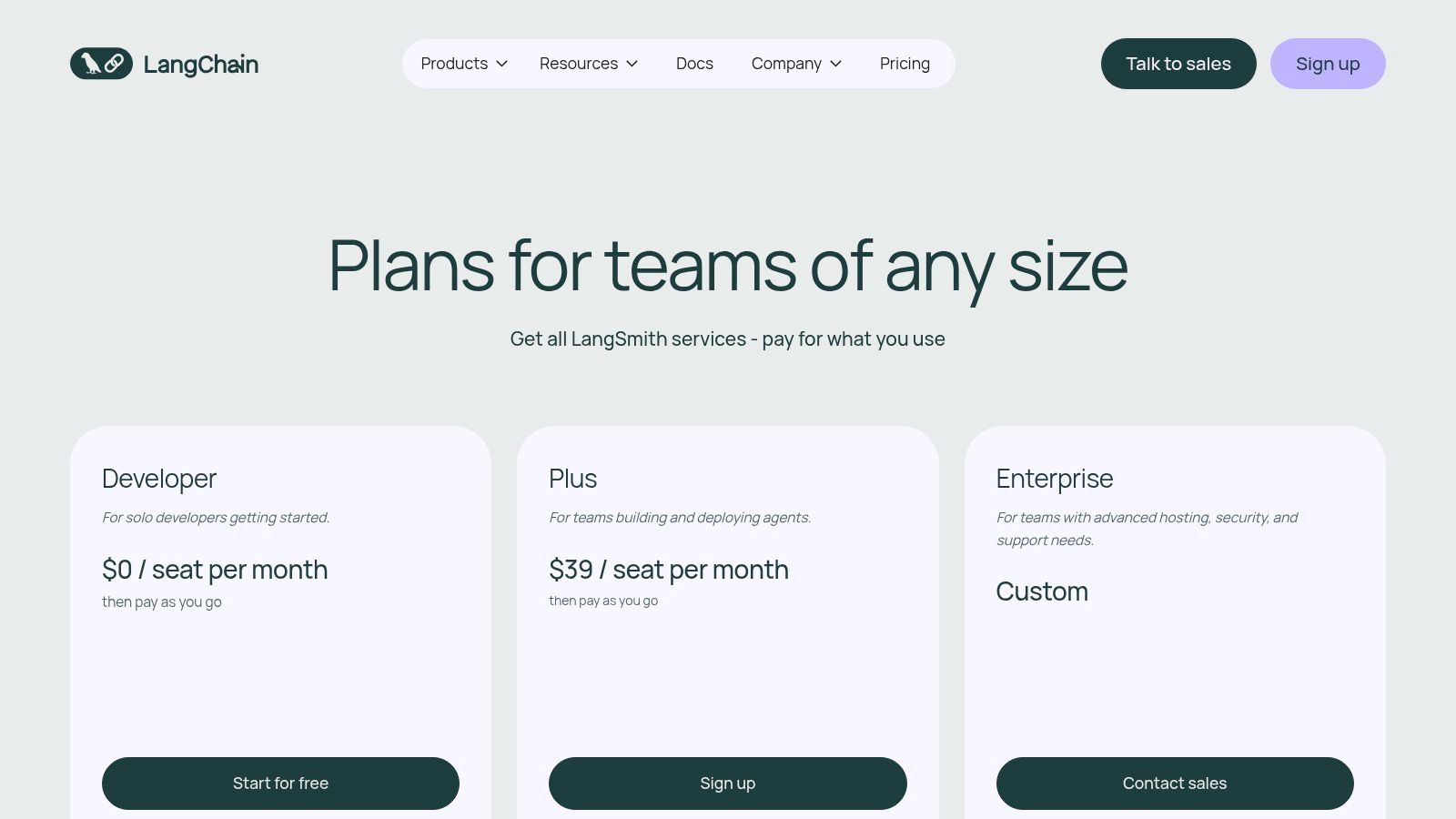

2. LangChain – LangSmith

LangSmith is the observability and testing platform for the popular LangChain framework, offering a powerful way to debug, evaluate, and monitor LLM applications. For teams building their own AI agents or complex prompt chains, it provides unmatched visibility into the inner workings of their systems. This makes it one of the best LLM optimization tools for AI visibility from a development perspective, allowing teams to refine how their applications process information and generate responses.

Its core strength lies in end to end tracing. Developers can visualize every step of an LLM call, from the initial prompt to the final output, including all intermediate function calls and tool usage. This granular view is essential for identifying bottlenecks, reducing latency, and ensuring the application behaves as expected.

A Top LLM Tracking Tool for Developers

LangSmith stands out because it's deeply integrated with the LangChain and LangGraph ecosystems, making it a natural choice for teams already using these frameworks.

- End to End Tracing: Debug complex chains and agents by viewing every step, including prompts, model calls, and metadata.

- Evaluation Engine: Create datasets to test prompts and model versions offline and online, ensuring quality before deployment.

- Monitoring and Analytics: Track token usage, costs, and latency to optimize performance and manage expenses.

A key benefit is its robust evaluation framework, which helps developers ensure that any changes they make to their prompts or models lead to better, more accurate outputs. By improving application quality at the source, you can indirectly enhance how your content or data is interpreted and presented in generative AI systems. This foundational work is crucial for anyone looking to learn more about improving visibility in AI search environments.

Pricing and Onboarding

LangSmith offers a startup friendly "Plus" plan starting at $20 per month, which includes a generous allocation of traces, data points, and evaluation runs. The "Pro" and "Enterprise" tiers offer extended data retention, advanced security features like SSO and RBAC, and dedicated support for larger teams with more demanding operational needs. The self serve onboarding process is straightforward, allowing developers to integrate the platform and start tracing their applications quickly.

3. Weights & Biases (W&B) – Weave

Weights & Biases extends its classic machine learning experiment tracking into the LLM space with Weave, a toolkit designed for tracing, evaluating, and monitoring generative AI applications. It uniquely bridges the gap between traditional model development and modern LLM application management, making it an excellent choice for teams that need a unified view of both. For those looking to correlate model training telemetry with real world application performance, W&B Weave offers an integrated solution.

Its primary advantage is providing a single platform for the entire machine learning lifecycle. Teams can track model training runs and then seamlessly transition to monitoring the LLM application built on that model, viewing everything from prompt inputs and outputs to latency and token costs. This holistic perspective makes it one of the best LLM optimization tools for AI visibility, especially for organizations with mature MLOps practices.

Integrated LLM Tracking with W&B Weave

Weave is built for teams that require a comprehensive and integrated toolchain, connecting deep model experimentation with production level application monitoring.

- Unified Tracing and Monitoring: Track LLM calls alongside traditional model training metrics, viewing latency, inputs, outputs, and costs in one place.

- Evaluation and Datasets: Create and manage evaluation datasets to score model and prompt performance, with automations for CI/CD pipelines.

- Enterprise Ready Deployment: Offers robust cloud and on premise deployment options complete with enterprise grade support and security.

The ability to manage both model experiments and application telemetry in a single dashboard is a key differentiator. This allows developers to see how a fine tuned model's performance metrics directly impact the latency and quality of the final user facing application, providing a clear path for optimization.

Pricing and Onboarding

W&B offers a free tier for individual public projects. The "Pro" team plan, starting at $33 per user per month, provides private projects and enhanced collaboration features. For larger organizations requiring advanced security, dedicated support, and scalable deployment options, custom "Enterprise" pricing is available. The platform is well documented with clear examples, facilitating a smooth onboarding process for development teams.

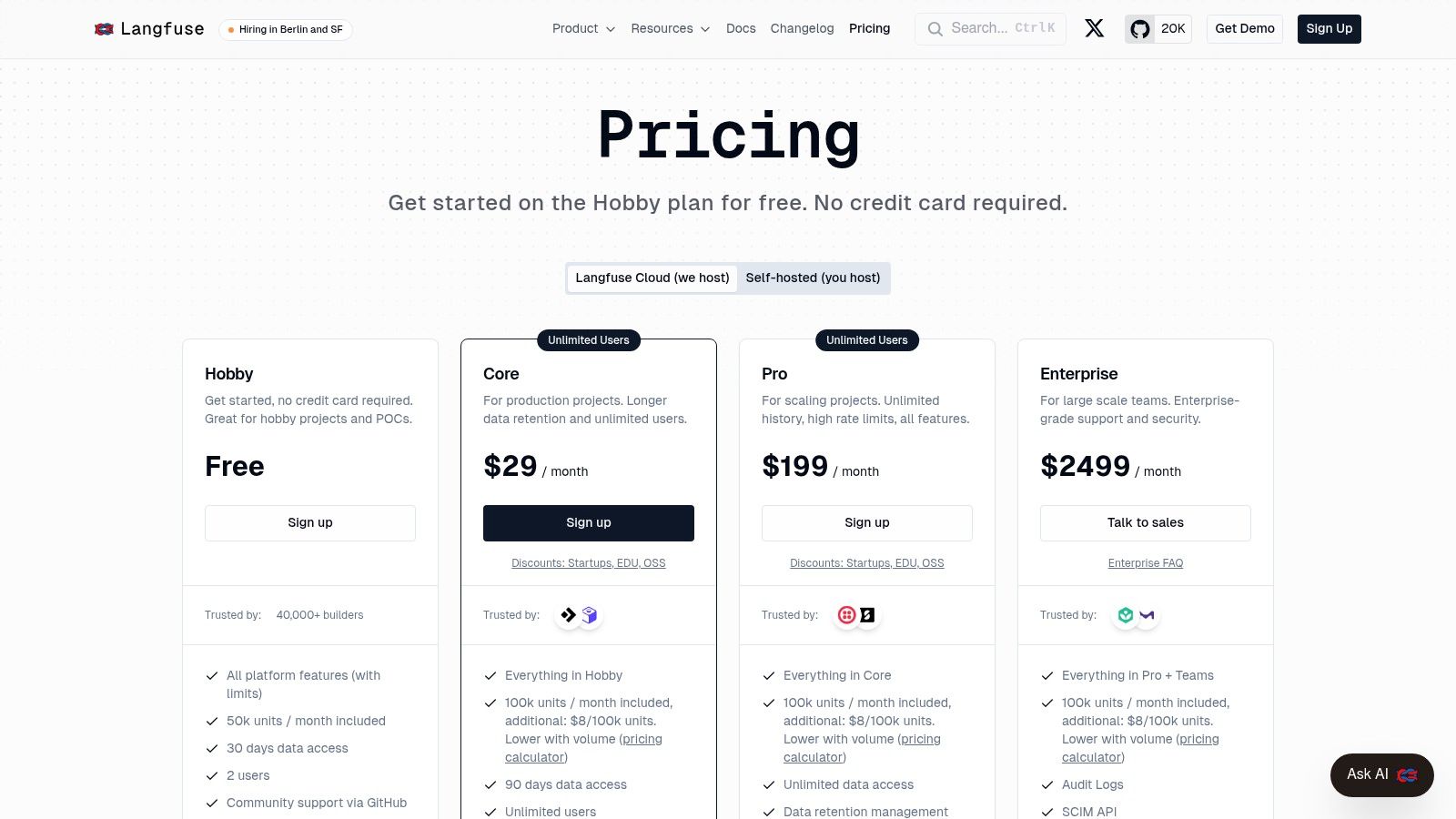

4. Langfuse

Langfuse is a comprehensive, open source platform for LLM observability, offering tools for tracing, evaluation, and analytics. It is a powerful option for teams that prioritize self hosting, cost control, or an active open source community driving product development. For developers and teams seeking granular control over their LLM application's performance, Langfuse provides the necessary framework to debug, monitor, and iterate effectively. Its open source nature makes it a uniquely flexible and transparent choice among the best LLM optimization tools for AI visibility.

The platform excels at providing detailed traces of LLM application runs, which allows developers to understand exactly how prompts are processed and responses are generated. This deep insight is crucial for identifying performance issues, optimizing token usage, and ensuring the application functions as intended, which in turn supports better generative AI outcomes.

An Open Source Tool for AI Search Visibility

Langfuse stands out with its open source foundation, giving teams the ultimate flexibility in deployment and customization. This makes it ideal for organizations with strict data privacy requirements or those wanting to avoid vendor lock in.

- Detailed Tracing: Capture and visualize complex LLM chains, including all inputs, outputs, and metadata for thorough debugging.

- Prompt Management: Version and manage prompts within a collaborative environment, making it easy to test and deploy improvements.

- Evaluation and Analytics: Create datasets to evaluate model and prompt performance, and monitor key metrics like cost, latency, and quality over time.

A significant advantage of Langfuse is its active community and rapid iteration cycle. For teams focused on generative SEO, this means the platform is continuously evolving to meet new challenges, helping them refine how their LLM applications interpret and present information for AI search visibility.

Pricing and Onboarding

Langfuse Cloud offers a generous free tier for individuals and small projects. Paid plans are usage based, calculated in "units," with a transparent pricing calculator available on their website. The "Pro" and "Team" plans offer higher limits and advanced features, while Enterprise tiers provide compliance options like SOC2 reports and HIPAA BAAs. For teams preferring full control, the open source version can be self hosted.

5. Datadog – LLM Observability

For enterprises already leveraging Datadog for infrastructure and application monitoring, LLM Observability provides a powerful, integrated solution to extend that visibility into their AI stack. It excels at correlating LLM performance with the rest of your system, making it one of the best LLM optimization tools for AI visibility from a full stack perspective. Teams can monitor request traces, track costs, and evaluate model quality within the same platform they use for logs, metrics, and APM.

This unified approach is Datadog’s key differentiator. Instead of treating LLM performance in isolation, it allows developers and operations teams to see how an LLM’s latency, cost, or a spike in errors impacts the entire user facing application. This holistic view is crucial for maintaining reliability and optimizing resource allocation in production environments.

Full Stack LLM Tools for AI Visibility

Datadog is ideal for organizations that need to connect LLM behavior to broader system health and business metrics, providing a single source of truth for observability.

- Unified Tracing: Correlate LLM request traces, prompts, and outputs with application performance metrics (APM), logs, and infrastructure health.

- Cost and Quality Monitoring: Automatically track token usage and associated costs while monitoring for safety issues or quality degradation using built in guardrails.

- Experimentation Framework: Compare the performance, cost, and output quality of different prompts, models, and configurations side by side to inform optimization decisions.

Its strength lies in providing enterprise grade monitoring that ties everything together. By understanding how your LLM performs in the context of your entire infrastructure, you gain deeper insights. This level of oversight is essential for any comprehensive strategy, and you can learn more about how it fits into the broader picture of AI-powered brand monitoring.

Pricing and Onboarding

Datadog offers flexible annual and on demand pricing for its LLM Observability product, which is based on the volume of indexed spans. While powerful, it can be more expensive than standalone tools, especially at scale, and may include minimum volume commitments. The platform is best suited for organizations already invested in the Datadog ecosystem, as onboarding involves integrating it with existing application monitoring setups.

6. Openlayer

Openlayer is an automated evaluation and monitoring platform designed for teams who want to catch LLM regressions and measure quality before and after deployment. It integrates directly into CI/CD pipelines, using LLM as a judge tests, tracing, and alerts to create a robust pre production testing environment. This makes it one of the best LLM optimization tools for AI visibility, especially for engineering heavy teams focused on automated quality assurance and preventing model performance degradation over time.

Its primary strength is its emphasis on automated regression workflows. By establishing baseline performance metrics, teams can ensure that any new changes, whether to the model, prompts, or data, do not negatively impact the application's output. This rigorous testing framework helps maintain the accuracy and relevance of AI generated content, which is fundamental to achieving and sustaining positive brand visibility in AI search results.

Automated Evaluation for Generative AI SEO

Openlayer excels in environments where predictable performance and automated testing are critical, making it a powerful choice for cross functional teams of engineers and product managers.

- Automated Evaluations: Leverage over 100 built in and customizable evaluation metrics, including checks for hallucinations, relevance, and PII.

- Git Based Workflows: Integrates with your version control system to trigger evaluations automatically with every pull request, catching issues before they reach production.

- Tracing and Observability: Monitor application performance in real time with comprehensive tracing and configurable alerts to quickly address live issues.

The platform is designed to scale from individual developers to large enterprises, offering SDKs and APIs for seamless integration. By automating the quality control process, teams can focus more on innovation while trusting that their application’s outputs remain reliable and high quality, directly impacting brand perception within generative AI ecosystems.

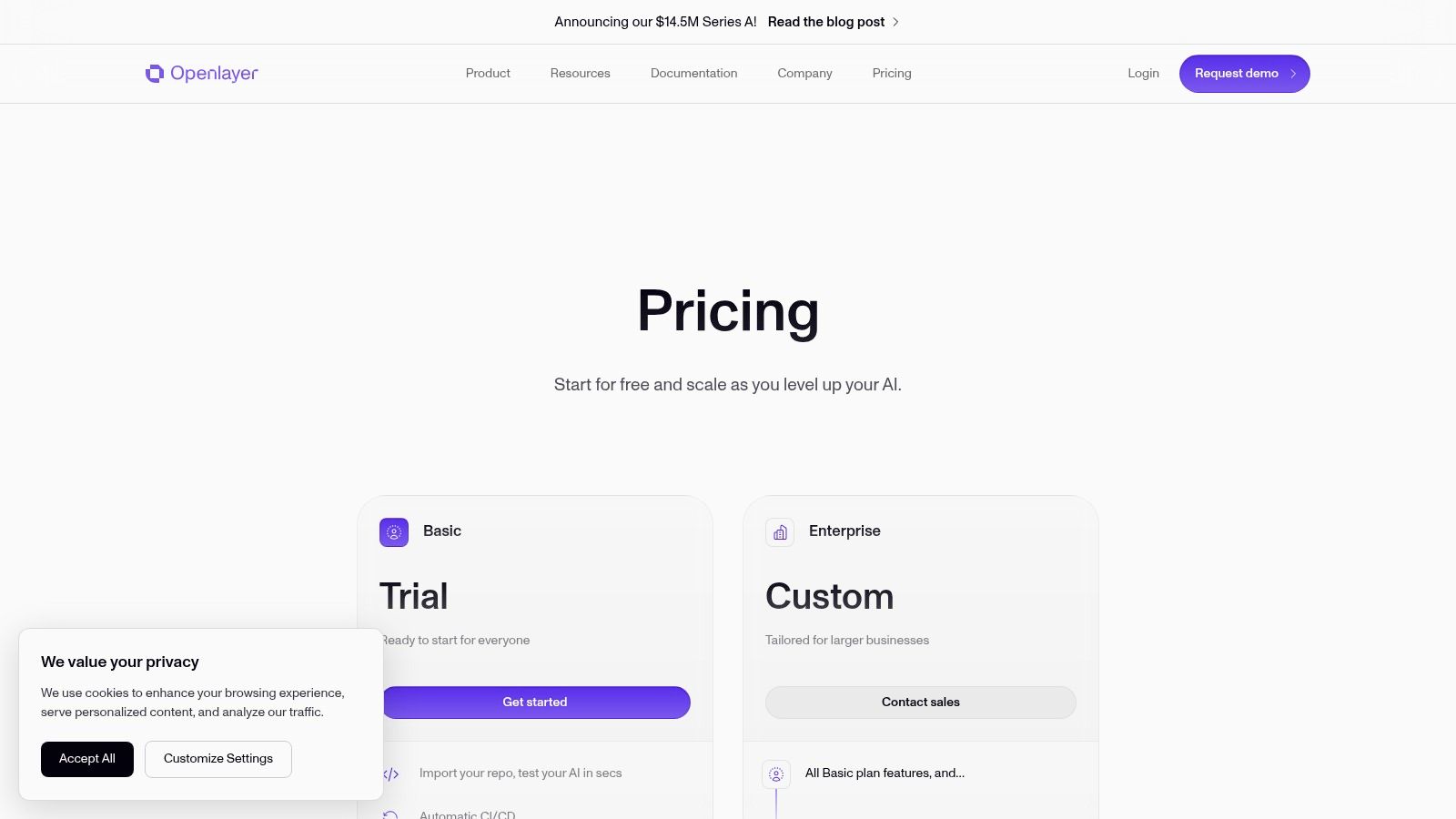

Pricing and Onboarding

Openlayer provides a free "Starter" plan for individuals and small projects, which includes a generous number of evaluations and traces. The "Growth" plan starts at $400 per month, offering more capacity, team collaboration features, and project dashboards. For larger organizations needing advanced security and deployment options like SSO, on premise hosting, and dedicated support, custom "Enterprise" pricing is available upon request. The platform is accessible via SDKs, allowing for a developer centric onboarding process.

7. Vellum.ai

Vellum.ai is an integrated development platform for building production ready LLM applications, combining prompt engineering, testing, and monitoring into a single workflow. It empowers teams to rapidly prototype, evaluate, and deploy reliable AI agents and prompt chains. This makes it one of the best LLM optimization tools for AI visibility by providing a controlled environment to ensure outputs are accurate and consistent before they are released, directly influencing how a brand's information is generated and presented.

The platform's core advantage is its unified toolset, which includes a visual prompt builder, a version controlled prompt registry, and a powerful evaluation suite. This combination allows developers to experiment with different models and prompts, test them against predefined criteria, and deploy the best performing versions with confidence. For businesses, this means less time spent on manual testing and a faster path to deploying high quality AI powered features.

A Unified Platform for Better AI Search Visibility

Vellum.ai stands out by offering a complete lifecycle management solution for LLM applications, from initial experimentation to production monitoring.

- Prompt Engineering and Management: A central registry for creating, versioning, and A/B testing prompts across multiple models.

- Evaluation and Testing: Build custom evaluation suites with unit tests and workflow tests to ensure consistent quality and prevent regressions.

- Built in RAG: Includes a managed vector knowledge base to easily implement Retrieval Augmented Generation for more accurate, context aware responses.

A key benefit is its robust evaluation engine, which enables teams to systematically measure prompt performance against key metrics like accuracy, relevance, and tone. By ensuring that the underlying LLM application is well tuned and factually grounded, you directly improve the quality of generative outputs that may mention your brand, contributing to a stronger and more reliable AI presence.

Pricing and Onboarding

Vellum offers a free "Developer" tier for individual experimentation. The "Startup" plan, starting at $250 per month, provides more generous usage credits, extended data retention, and team collaboration features. For larger organizations, the "Business" and "Enterprise" tiers add advanced governance features like SSO, RBAC, and VPC installation options, along with dedicated support and service level agreements. The platform is designed for a self serve onboarding experience, allowing developers to sign up and start building immediately.

8. Humanloop

Humanloop is an enterprise focused platform for developing, evaluating, and monitoring LLM applications, emphasizing governance, security, and collaboration. It bridges the gap between technical teams and domain experts by providing a unified environment for prompt engineering, model evaluation, and production monitoring. This makes it one of the best LLM optimization tools for AI visibility for larger organizations that require stringent compliance and collaborative workflows to refine their AI driven content and responses.

Its strength lies in its comprehensive, human in the loop system. The platform allows teams to manage prompt versions, run sophisticated evaluations using LLM as a judge, and collect end user feedback to create a continuous improvement cycle. This holistic approach ensures that the LLM's outputs are not only technically sound but also aligned with business goals and user expectations.

Enterprise LLM Tracking for AI Visibility

Humanloop is built for teams where collaboration and governance are paramount, offering features that support complex development and deployment pipelines.

- Collaborative Prompt Management: A multi LLM playground with robust versioning and deployment controls allows engineers and subject matter experts to work together.

- Advanced Evaluation: Run offline and online evaluations using a mix of UI and code first evaluators, including LLM as a judge methodologies.

- Production Monitoring and Feedback: Integrates tracing, logging, alerting, and end user feedback mechanisms to close the loop between development and real world performance.

- Enterprise Grade Security: Offers VPC and self hosting options, along with SOC 2 and HIPAA/BAA support for regulated industries.

The platform is ideal for medium to large organizations that need to maintain high standards of quality and compliance. Its human review workflows are crucial for ensuring that AI generated content is accurate and on brand before it impacts public visibility.

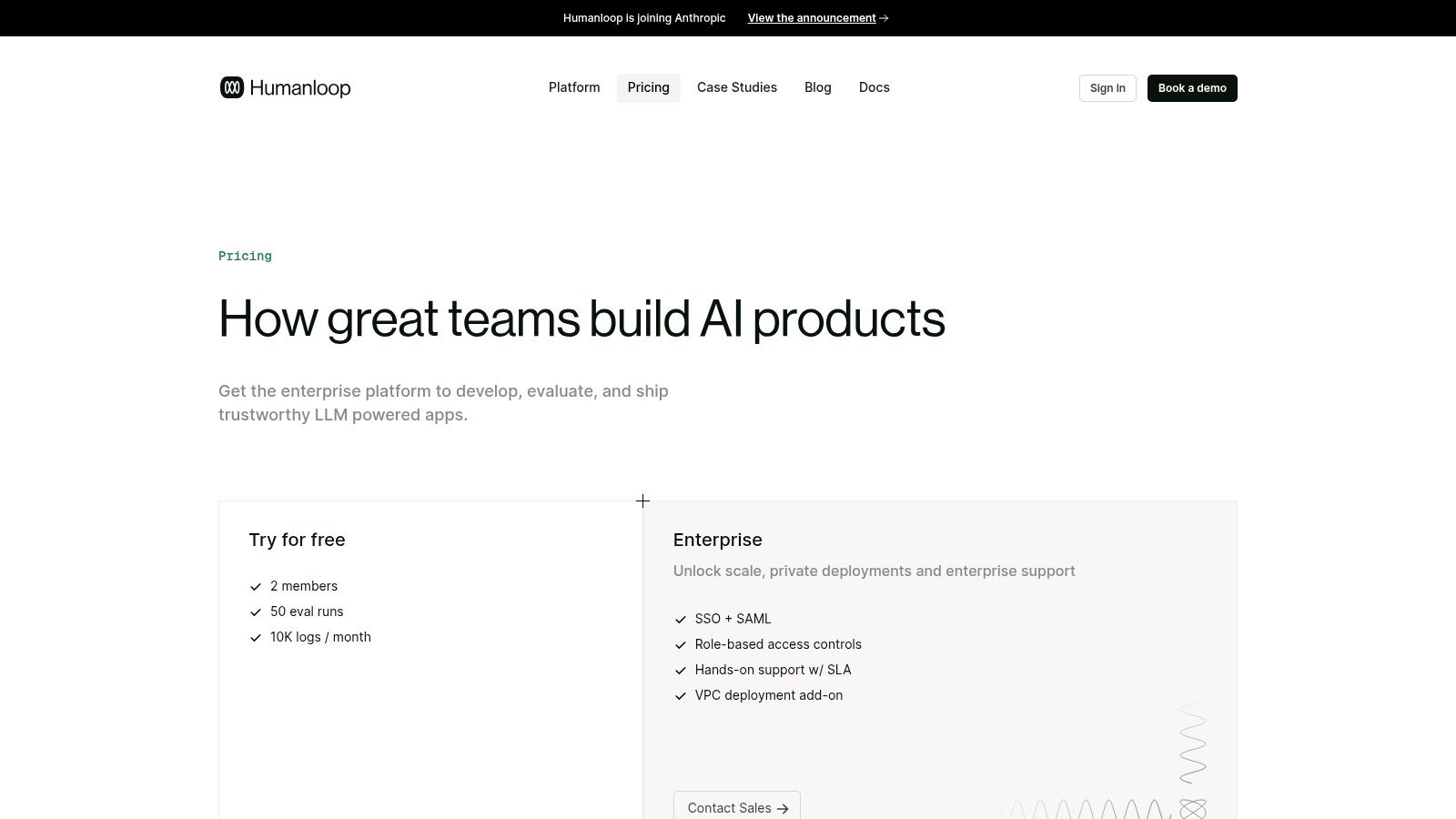

Pricing and Onboarding

Humanloop provides a free tier for individuals and a "Startup" plan starting at $100 per month, which includes core evaluation and monitoring features. For larger teams, the "Growth" and "Enterprise" plans are available by contacting sales, offering advanced features like SSO, dedicated support, and on premise deployment options. The platform is designed for team adoption, with clear documentation to support integration into existing CI/CD pipelines.

9. Arize AI (Phoenix OSS and AX SaaS)

Arize AI offers a dual approach to LLM observability with its open source tool, Phoenix, and its enterprise grade SaaS platform, Arize AX. This combination provides a flexible path for teams, allowing them to start with a powerful local solution for tracing and evaluation before scaling to a fully managed platform with advanced monitoring and dashboards. This makes it one of the most versatile LLM optimization tools for AI visibility, catering to both individual developers and large organizations.

Its strength lies in providing a unified view across development and production environments. Phoenix allows for deep, code level debugging of traces and spans, while Arize AX extends this capability with online evaluations, drift detection, and automated monitors. This end to end perspective helps ensure that models perform reliably and predictably once deployed.

An LLM Optimization Tool for Any Scale

Arize AI is ideal for ML engineering and data science teams who need robust tools for both pre launch evaluation and post deployment performance monitoring. It bridges the gap between experimentation and production.

- Open Source and SaaS Flexibility: Start with Phoenix for local tracing and evaluation, then transition to the managed Arize AX platform for scalable production monitoring.

- Comprehensive Evaluation: Conduct offline and online evaluations using custom metrics to validate model quality and prevent performance regressions.

- Production Monitoring: Track everything from token usage and costs to model drift and data quality with customizable dashboards and automated alerts.

The platform's support for OpenTelemetry standards and its Python/JS SDKs ensures easy integration into existing MLOps workflows. By using Arize to fine tune model behavior and evaluate outputs, you can improve the quality and accuracy of the information your application generates, which directly impacts its visibility and reliability within AI ecosystems.

Pricing and Onboarding

Arize offers distinct pricing for its products. Phoenix is open source and free to use. The Arize AX platform has a limited free tier for a single user with 7 day data retention. The paid "Startup" plan offers more flexibility and is designed for early stage companies, while "Pro" and "Enterprise" tiers provide extended retention, higher usage limits, and advanced features like custom roles and dedicated support. The multiple SKUs may require careful consideration, but the model allows teams to choose the solution that best fits their current needs and budget.

10. Galileo

Galileo is an LLM evaluation and observability platform designed to help teams accelerate development while maintaining high quality outputs in production. It offers a comprehensive suite of tools for tracing, analytics, and setting up guardrails, making it a powerful solution for teams that need deep insights into model performance. This focus on quality and observability makes it one of the best LLM optimization tools for AI visibility, as it helps ensure the underlying model behaves predictably and reliably.

Its platform is built for scale, providing detailed analytics on crucial metrics like drift, quality, and overall performance. By giving developers the tools to monitor and evaluate their models from launch through production, Galileo helps prevent quality degradation over time and ensures consistent, accurate responses.

Improving AI Search Presence with Galileo

Galileo distinguishes itself with enterprise grade features and a generous free tier, making it accessible to both startups and large organizations looking to implement robust LLM operations.

- Advanced Tracing and Analytics: Gain deep visibility into model performance with scalable tracing and dashboards that monitor for drift and quality issues.

- Unlimited Custom Evaluations: The free tier allows for unlimited custom evaluations, enabling teams to thoroughly test and validate model changes.

- Enterprise Ready Deployment: Offers advanced security features like RBAC and deployment options including VPC and on premises for organizations with strict compliance needs.

For teams focused on maintaining brand integrity and factual accuracy in AI generated content, Galileo's guardrails and evaluation engine are invaluable. By setting up automated checks for hallucinations, toxicity, or prompt injections, you can directly influence the quality of information your application generates, which is a foundational step in improving your brand’s visibility in generative AI systems.

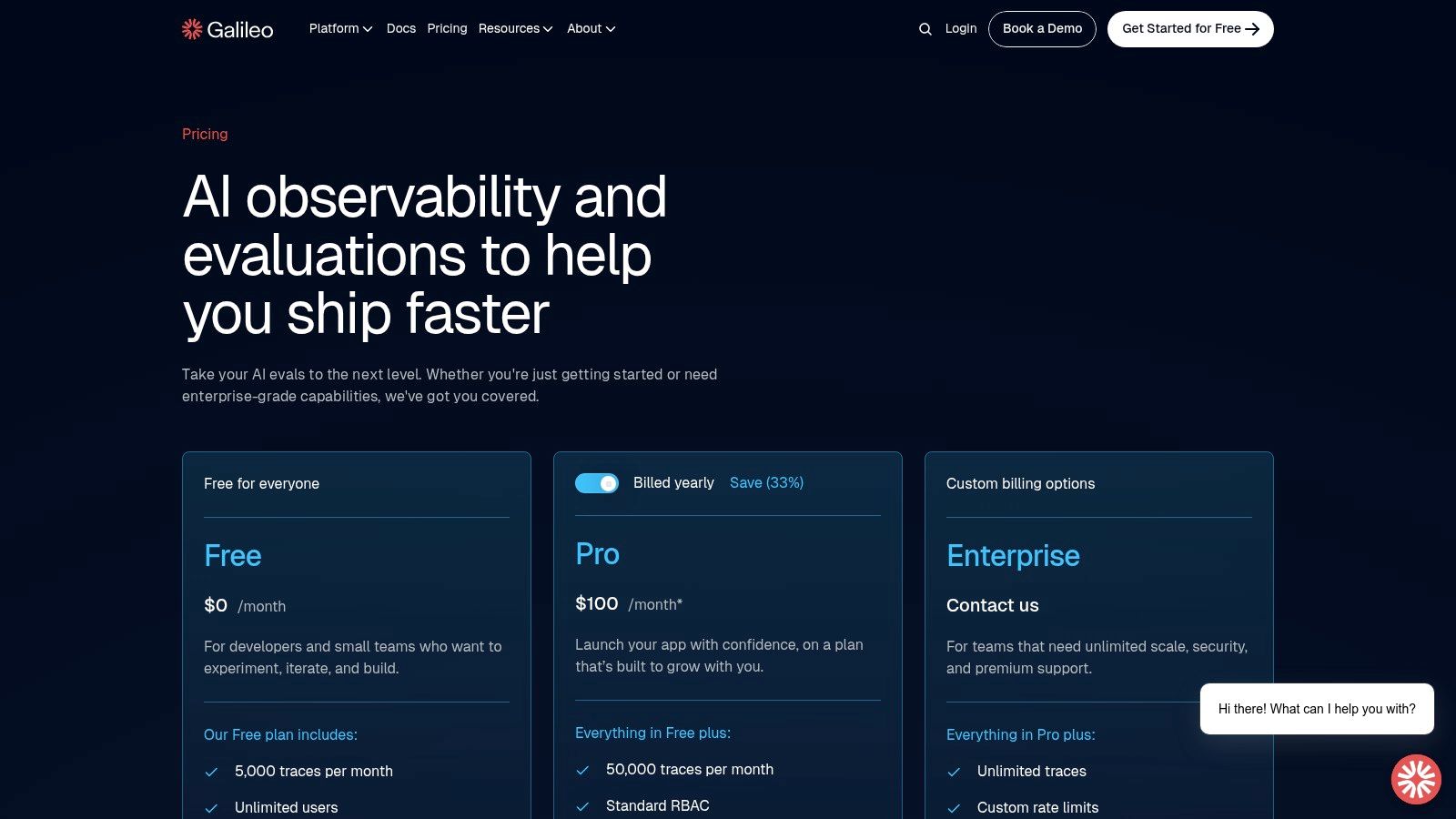

Pricing and Onboarding

Galileo provides a very accessible entry point with its free "Launch" plan, which includes 5,000 traces per month and unlimited evaluations. The "Pro" tier scales up to 100,000 traces per month and adds advanced features like RBAC and priority support via Slack. For larger organizations requiring custom deployments and security, the "Enterprise" plan is available through direct sales consultation. Onboarding is designed to be self serve, allowing developers to integrate and begin monitoring quickly.

11. Hugging Face

Hugging Face serves as the central hub for the open source AI community, providing a vast repository of models, datasets, and evaluation tools. It’s an essential resource for teams looking to benchmark different models, experiment with new architectures, and optimize their applications for cost and performance. By offering a platform to compare and select the most effective models for a specific task, it stands out as one of the best LLM optimization tools for AI visibility, enabling teams to build more accurate and efficient generative systems from the ground up.

Its core value is the sheer breadth of resources available. Teams can quickly find and test hundreds of models to see which one best understands their content or generates the most relevant responses. The platform’s Inference Providers feature simplifies this process by offering pay as you go access and unified billing, making it easy to route requests and manage costs across various model hosting services.

A Community Hub for Enhancing AI Visibility

Hugging Face excels as a launchpad for model discovery and experimentation, allowing teams to make informed decisions before committing to a specific LLM architecture.

- Extensive Model and Dataset Hub: Access thousands of pre trained models and datasets for benchmarking and fine tuning.

- Inference Providers: Utilize a unified, pay as you go system for accessing over 200 models with streamlined billing.

- Evaluation and Analytics: Run community or private evaluations to measure model performance on specific tasks and datasets.

The main advantage is its role as a centralized testing ground. Instead of integrating multiple APIs, developers can use Hugging Face to compare how different models interpret and cite their brand’s information. This foundational work helps ensure the models selected for production are optimized for accuracy, directly impacting brand visibility in AI generated answers.

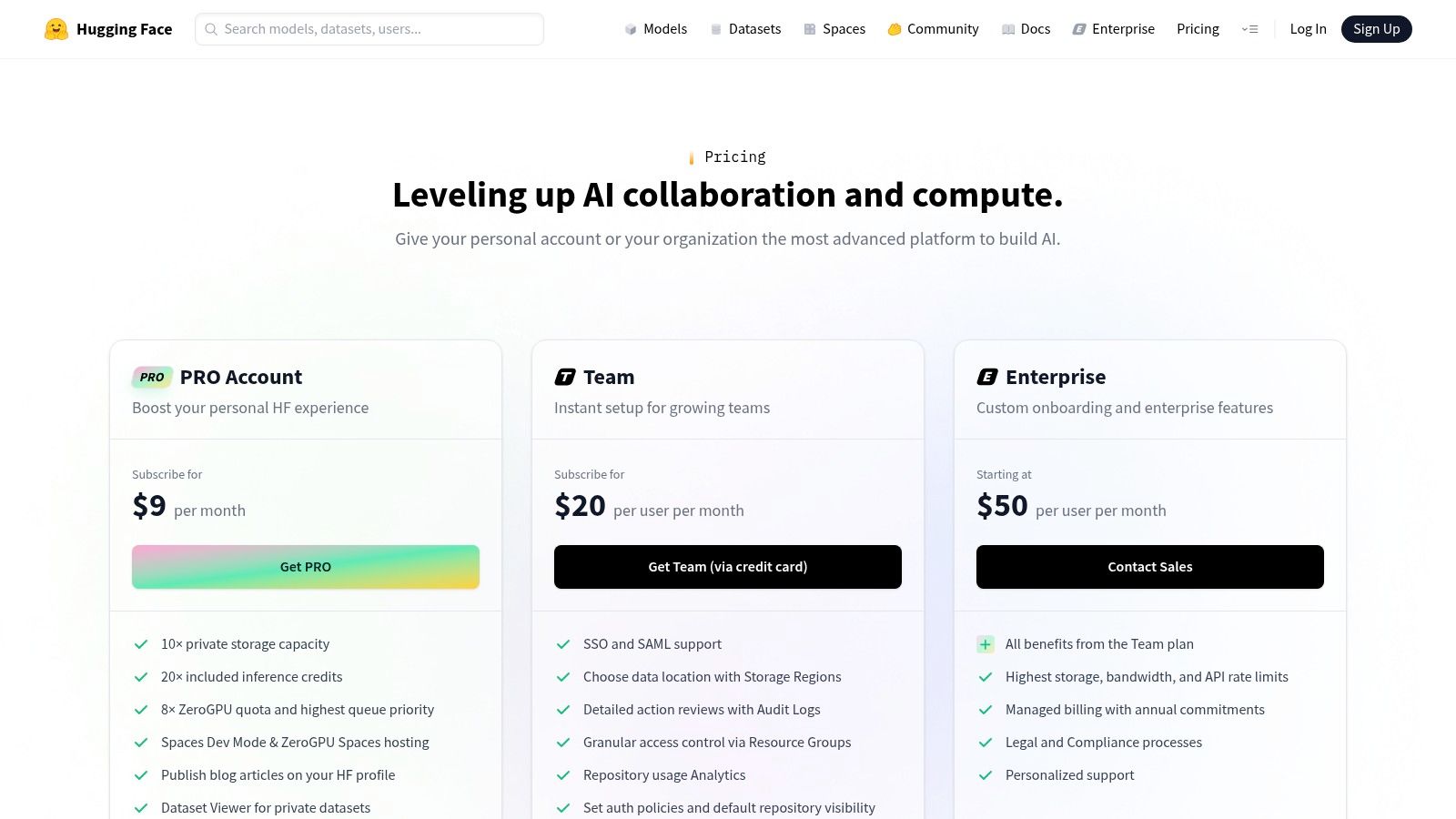

Pricing and Onboarding

Hugging Face offers a free tier for individuals and a "Pro" plan at $9 per month with additional private repositories and compute resources. The "Enterprise" plan provides advanced security features like SSO and audit logs, regional storage, and dedicated support, with pricing available upon request. The platform's built in credit system for inference simplifies budget management for teams experimenting with various models.

12. AWS Marketplace (example: LangWatch Cloud)

AWS Marketplace serves as a centralized procurement channel for enterprises to discover, subscribe to, and deploy a wide range of third party LLM observability and evaluation tools. Instead of being a single tool, it’s a digital catalog that streamlines purchasing solutions like LangWatch Cloud, allowing teams to use their existing AWS billing and procurement workflows. This makes it one of the best LLM optimization tools for AI visibility for organizations already invested in the AWS ecosystem, simplifying the process of finding and managing the right LLMOps solutions.

Its core strength is simplifying the often complex process of vendor evaluation and management. Teams can trial multiple competing platforms through a unified interface, compare features, and make purchasing decisions without navigating separate contracts or billing systems. For large enterprises, this consolidation is a significant operational advantage, enabling faster adoption of critical monitoring and optimization technologies.

Procurement Tools for AI Search Optimization

The features available through AWS Marketplace depend entirely on the individual tool listed, such as LangWatch Cloud. However, the platform itself provides a consistent framework for procurement.

- Centralized Procurement: Find, buy, and deploy LLMOps software using your AWS account and consolidated billing.

- Simplified Trials: Easily test and compare multiple observability tools from different vendors in one place.

- Enterprise Grade Integration: Leverages existing AWS agreements, private offers, and integrations for seamless adoption.

A key benefit for enterprise users is the ability to leverage private offers and standardized invoicing, which fits neatly into established IT governance and financial processes. This streamlined approach allows teams to focus more on optimizing their LLM applications for better performance and visibility and less on the administrative overhead of sourcing new software.

Pricing and Onboarding

Pricing on AWS Marketplace is set by the individual vendors and varies widely. Some listings offer free trials, pay as you go models, or fixed monthly subscriptions, while others require contacting the vendor for a custom enterprise quote. The main advantage is that all transactions are handled through your AWS bill. Onboarding is also vendor specific, but the Marketplace facilitates the initial subscription and deployment process, often with just a few clicks.

Comparing the Best LLM Optimization Tools for AI Visibility

To help you decide, here is a breakdown of the top platforms based on their primary focus, key features, and ideal user. This comparison should clarify which solution best fits your organization's generative SEO and LLM tracking needs.

| Tool | Primary Focus | Key Feature | Ideal User | Pricing Model |

|---|---|---|---|---|

| Riff Analytics | Brand citation & AI visibility tracking | Multi engine citation source mapping | SEO & Brand Teams | Free Trial, Contact for Plans |

| LangSmith | LLM app debugging & tracing | Deep integration with LangChain | LangChain Developers | Usage based |

| Weights & Biases | ML experiment & LLM app monitoring | Unified model and app telemetry | MLOps Teams | Per Seat, Enterprise Plans |

| Langfuse | Open source LLM observability | Self hosting and OSS community | Devs needing control/privacy | Free Tier, Usage based Cloud |

| Datadog | Full stack infrastructure & LLM monitoring | Correlation with APM & logs | Enterprises on Datadog | Usage based, by volume |

| Openlayer | Automated pre-production evaluation | Git based CI/CD regression testing | Engineering & Product Teams | Free Tier, Paid Plans |

| Vellum.ai | Prompt engineering & versioning | Visual prompt builder and registry | Teams needing rapid prototyping | Free Tier, Paid Plans |

| Humanloop | Enterprise LLM governance & monitoring | Human in the loop feedback workflows | Large, regulated orgs | Startup Plan, Enterprise via Sales |

| Arize AI | OSS & SaaS LLM observability | Phoenix (OSS) & Arize AX (SaaS) | ML Engineering Teams | Free Tier, Paid Plans |

| Galileo | LLM evaluation & quality monitoring | Guardrails & drift detection | Teams focused on model quality | Generous Free Tier, Pro Plans |

| Hugging Face | Model discovery & benchmarking | Vast model & dataset repository | Researchers & ML Developers | Free Tier, Pay as you go inference |

| AWS Marketplace | Centralized software procurement | Unified AWS billing for 3rd party tools | Enterprises using AWS | Varies by Vendor |

Summary and Final Recommendations

Navigating the new landscape of AI visibility requires a deliberate strategy and the right set of tools. We have explored a range of solutions, from developer focused observability platforms like Langfuse and Datadog to brand centric monitoring tools like Riff Analytics. The core takeaway is that achieving success in generative AI search is not a passive activity. It demands active management, measurement, and optimization.

Your choice of tool should align directly with your primary objective. If your goal is to build and debug complex AI applications, platforms with deep tracing and evaluation capabilities are essential. If your focus is on ensuring your brand's content is accurately cited and visible in AI search results, you need a tool built for citation tracking and competitive analysis. For most organizations, a combination of tools will be necessary to cover the full lifecycle, from development to brand monitoring. By selecting the right tools, you can transform the challenge of AI driven search into a significant competitive advantage.

Frequently Asked Questions

What is the most important metric for AI visibility?

The most critical metric is citation share. This measures how often your brand's content is used as a source in AI generated answers for relevant queries compared to competitors. A high citation share indicates that AI models view your content as authoritative and trustworthy, directly impacting your brand's visibility and influence.

How do I optimize my content for AI search engines?

Optimizing for AI involves creating clear, factual, and well structured content. Use semantic HTML (like schema markup), write in a natural and unambiguous language, and ensure your information is supported by clear evidence and sources. The goal is to make it as easy as possible for a machine to understand, verify, and cite your content accurately.

What is the difference between LLM observability and AI visibility tools?

LLM observability tools (like Langfuse or Datadog) are developer focused platforms used to monitor the internal performance of an AI application, such as latency, cost, and output quality. AI visibility tools (like Riff Analytics) are marketer focused platforms used to monitor a brand's external presence across public AI engines, tracking mentions, citations, and competitor performance.

Can I use traditional SEO tools for AI visibility?

While traditional SEO tools are excellent for tracking keyword rankings on SERPs, they are not designed to measure brand presence within AI generated answers. You need specialized LLM optimization and AI visibility tools to track citations and mentions inside platforms like ChatGPT, Perplexity, or Google's AI Overviews, as these do not operate like a standard list of blue links.

How can small businesses start with AI visibility optimization?

Small businesses can start by focusing on a specific niche and creating high quality, expert content that answers common customer questions directly and factually. Use a free tool to track mentions for a small set of core brand and product queries. This provides a baseline to understand how AI engines perceive your brand and identifies the most critical content gaps to address first.